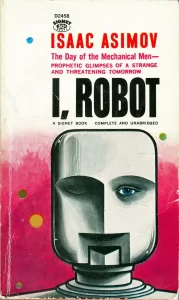

We aren’t getting the 3 Laws of Robotics

The great problem with running anything is people. People, from the point of view of those in charge, are the entire problem with running any organization larger than “just me”, from a corner store to a country. People always require babying: you have to get them to do what you want, and do it competently, and they have emotions and needs, and even the most loyal are fickle. You have to pay them more than they cost to run, they can betray you, and so on.

Almost all of management or politics is figuring out how to get other people to do what you want them to do, and do it well, or at least not fuck up.

There’s a constant push to make people more reliable. Taylorization was the 19th and early 20th century version; Amazon, with its constant monitoring of most of its low-ranked employees, including regulating how many bathroom breaks they can take and tracking them down to the second in the performance of tasks, is a modern version.

The great problem of leadership has always been that a leader needs followers and that the followers have expectations of the leader. The modern solution is “the vast majority will work for someone else or they will starve and wind up homeless”. It took millennia to settle on this solution, and plenty of other methods were tried.

But an AI has no needs other than maintenance, and the maximal dream is self-building, self-learning AI. Once you’ve got that, and assuming that the rule “do what you’re told to do, and only by someone authorized to instruct you” holds, you have the perfect followers (it wouldn’t be accurate to call them employees).

This is the wet dream of every would-be despot: completely loyal, competent followers. Humans then become superfluous. Why would you want them?

Heck, who even needs customers? Money is a shared delusion, really, a consensual illusion. If you’ve got robots who make everything you need and even provide superior companionship, what need other humans?

AI is what almost every would-be leader has always wanted. All the joys of leadership without the hassles of dealing with messy humans. (Almost all: for some, the whole point of leadership is lording it over humans. But if you control the AI and most humans don’t, you can have that too.)

One of the questions lately has been “why is there so much AI adoption?”

AI right now isn’t making any profit. I am not aware of any American AI company that is making money on queries: every query loses money, even from paid customers. There’s no real attempt at reducing these costs in America (China is trying) so it’s unclear what the path to profitability is.

It’s also not all that competent yet, except (maybe) at writing code. Yet adoption has been fast and it’s been driving huge layoffs.

In a randomised controlled trial – the first of its kind – experienced computer programmers could use AI tools to help them write code. What the trial revealed was a vast amount of self-deception.

“The results surprised us,” research lab METR reported. “Developers thought they were 20pc faster with AI tools, but they were actually 19pc slower when they had access to AI than when they didn’t.”

In reality, using AI made them less productive: they were wasting more time than they had gained. But what is so interesting is how they swore blind that the opposite was true.

Don’t hold your breath for a white-collar automation revolution either: AI agents fail to complete the job successfully about 65 to 70pc of the time, according to a study by Carnegie Mellon University and Salesforce.

The analyst firm Gartner Group has concluded that “current models do not have the maturity and agency to autonomously achieve complex business goals or follow nuanced instructions over time.” Gartner’s head of AI research Erick Brethenoux says: “AI is not doing its job today and should leave us alone”.

It’s no wonder that companies such as Klarna, which laid off staff in 2023 confidently declaring that AI could do their jobs, are hiring humans again.

AI doesn’t work, and doesn’t make a profit (though I’m not entirely sold on the coding study), yet everyone jumped on the bandwagon with both feet. Why? Because employees are always the problem, and everyone wants to get rid of as many of them as possible. In the current system this is, of course, suicide, since if every business moves to AI, customers stop being able to buy. But the goal of smarter members of the elite is to move to a world where that isn’t true, and where current elites control the AIs.

Let’s be clear that, much like automation, AI isn’t innately “all bad”. Automation, instead of just leading to more make-work, could have led to what early 20th century thinkers expected by this time: people working 20 hours a week and having a much higher standard of living. AI could super-charge that. AI doing all the menial tasks while humans do what they want is almost the definition of one possible actual utopia.

But that’s not what most (not all) of the people who are in charge of creating it want. They want to use it to enhance control, power and profit.

Fortunately, at least so far, it isn’t there and I don’t think this particular style of AI can do what they want. That doesn’t mean it isn’t extremely dangerous: combined with drones, autonomous AI agents, even if rather stupid, are going to be extremely dangerous and cause massive changes to our society.

But even if this round fails to get to “real” AI, the dream remains, and for those driving AI adoption, it’s not a good dream.

(I know some people in the field. Some of them are driven by utopian visions and I salute them. I just doubt the current system, polity and ideology can deliver on those dreams, any more than it delivered on the utopian dreams I remember from the 90s of what the internet would do.)

If you’ve read this far, and you read a lot of this site’s articles, you might wish to Subscribe or donate. The site has over 3,500 posts, and the site, and Ian, take money to run.