As has been noted, AI is being used to cheat. A lot:

Lee said he doesn’t know a single student at the school who isn’t using AI to cheat. To be clear, Lee doesn’t think this is a bad thing. “I think we are years — or months, probably — away from a world where nobody thinks using AI for homework is considered cheating,” he said.

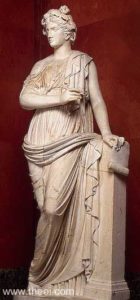

Clio, the Muse of History

He’s stupid. But that’s OK, because he’s young. What studies are showing is that people who use AI too much get stupider.

The study surveyed 666 participants across various demographics to assess the impact of AI tools on critical thinking skills. Key findings included:

- Cognitive Offloading: Frequent AI users were more likely to offload mental tasks, relying on the technology for problem-solving and decision-making rather than engaging in independent critical thinking.

- Skill Erosion: Over time, participants who relied heavily on AI tools demonstrated reduced ability to critically evaluate information or develop nuanced conclusions.

- Generational Gaps: Younger participants exhibited greater dependence on AI tools compared to older groups, raising concerns about the long-term implications for professional expertise and judgment.

The researchers warned that while AI can streamline workflows and enhance productivity, excessive dependence risks creating “knowledge gaps” where users lose the capacity to verify or challenge the outputs generated by these tools.

Meanwhile, AI is hallucinating more and more:

Reasoning models, considered the “newest and most powerful technologies” from the likes of OpenAI, Google and the Chinese start-up DeepSeek, are “generating more errors, not fewer.” The models’ math skills have “notably improved,” but their “handle on facts has gotten shakier.” It is “not entirely clear why.”

If you can’t do the work without AI, you can’t check the AI. You don’t know when it’s hallucinating, and you don’t know when what it’s doing isn’t the best or most appropriate way to do the work. And if you’re totally reliant on AI, well, what do you bring to the table?

Students using AI to cheat are, well, cheating themselves:

It isn’t as if cheating is new. But now, as one student put it, “the ceiling has been blown off.” Who could resist a tool that makes every assignment easier with seemingly no consequences? After spending the better part of the past two years grading AI-generated papers, Troy Jollimore, a poet, philosopher, and Cal State Chico ethics professor, has concerns. “Massive numbers of students are going to emerge from university with degrees, and into the workforce, who are essentially illiterate,” he said. “Both in the literal sense and in the sense of being historically illiterate and having no knowledge of their own culture, much less anyone else’s.” That future may arrive sooner than expected when you consider what a short window college really is. Already, roughly half of all undergrads have never experienced college without easy access to generative AI. “We’re talking about an entire generation of learning perhaps significantly undermined here,” said Green, the Santa Clara tech ethicist. “It’s short-circuiting the learning process, and it’s happening fast.”

This isn’t complicated to fix. Instead of assigning essays and unsupervised out-of-class work, instructors are going to have to evaluate knowledge and skills through:

- Oral tests. Ask them questions, one on one, and see if they can answer and how good their answers are.

- In-class, supervised exams and assignments. No AI aid, with proctors there to make sure of it: can you do it without help?

The idea that essays and take-home assignments are the way to evaluate students wasn’t handed down from on high, and hasn’t always been the way students’ knowledge was judged.

Now, of course, this is extra work for instructors, and the students will whine, but who cares? Those who graduate from such programs (which will also teach how to use AI; not everything has to be done without it) will be more skilled and capable.

Students are always willing to cheat themselves by cheating and not actually learning the material. This is a new way of cheating, but there are old methods which will stop it cold, IF instructors will do the work, and if they can give up the idea, in particular, that essays and at-home assignments are a good way to evaluate work. (They never entirely were: there was an entire industry for writing other people’s essays, which I assume AI has pretty much killed.)

AI is here, and adapting to it requires changes. That’s all. And unless things change, it isn’t going to replace all workers or any such nonsense: the hallucination problem is serious, researchers have no idea how to fix it, and right now there is no US company making money on AI. Every single query, even from paying clients, costs more to run than it returns.

IF AI delivered reliable results, and thus really could replace all workers and fully automate knowledge work, well, companies might be willing to pay a lot more for it. But as it stands right now, I don’t see the maximalist position happening. And my sense is that this particular model of AI, a blend of statistical compression and reasoning, cannot be made reliable, period. A new model is needed.

So, make the students actually do the work, and actually learn, whether they want to or not.

This blog has always been free to read, but it isn’t free to produce. If you’d like to support my writing, I’d appreciate it. You can donate or subscribe by clicking on this link.

Hairhead

We are seeing this realization of the negative effects of our technological revolution(s) in several areas. One area in particular is the use of smartphones by students, especially in elementary and middle schools. Just this year, the BC Ministry of Education banned students’ use of cellphones during class time throughout the province. Similar bans and limitations are being introduced in many other countries and smaller jurisdictions.

One can’t help but think of Socrates’ dislike of the written word. “Without a good, strong, developed memory, how can people order their thoughts and come to good conclusions?” he mused (I paraphrase). What he said was and is true, but we have managed to integrate books into our lives successfully, at least until now, when smartphones are basically the Library of Alexandria, with cat memes, in our pockets.

Ian Welsh

Thing is, smart phones aren’t used as the Library of Alexandria. Alas.

marku52

But AI sort of kinda works. At least it looks like it works. And it lets bosses fire workers, and cut pay.

Hence it will be deployed massively, even if it loses money for them. As Kalecki pointed out long ago, capitalists will gladly suffer a loss in profit so long as they maintain power over workers.

One more argument for staying far, far away from any office work. Become a welder or a plumber. AI ain’t going to clear your clogged toilet.

Oakchair

“Meanwhile, AI is hallucinating more

generating more errors,”

—-

For some this is a bug; for others it is a feature.

“Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.” —Dune

AI isn’t spewing falsehoods and lies only because of garbage in, garbage out. It’s telling you what those who made it want you to hear, think, and believe.

—-

studies are showing is that people who use AI too much get stupider.

—-

In this respect AI is repeating a phenomenon that Ivan Illich detailed in the 1970s in “Deschooling Society” and “Disabling Professions”:

“School prepares people for the alienating institutionalization of life, by teaching the necessity of being taught. Once this lesson is learned, people lose their incentive to develop independently.”

We’ve been conditioned since birth to believe that in order to learn we need to be taught by a teacher. In order to be healthy we need to follow the doctor’s orders. In order to be moral we need to do what the priest tells us the Bible says, and so on.

The effect is a populace disabled by professions. People can’t learn on their own because a teacher needs to do it for them. They can’t improve their health because only the doctor knows how. They can’t repair household appliances because only a professional can.

It’s created a thoroughly anti-intellectual society where doing your own research marks one as a crazy, stupid conspiracy theorist. A society filled with people who don’t even try to read the sole clinical trial before taking a novel drug over and over and over again. Everyone believes not only that they are too stupid to understand, but that trying to understand is itself a mark of ignorance.

This is a society perfectly suited to be enslaved by “AI” because it is already one whose ability to think and function has been disabled by its religious obedience to the “experts”.

AI isn’t providing us with a new ruler; it’s the new face of the same rulers who’ve ruled us for generations.

Mary Bennet

Take-home assignments were one way a plain or awkward person could have their efforts known and appreciated. You still had to work harder than the lookers and the rich goofballs, but at least teach did have to read and grade what you turned in. And your work was hidden from the other students, so it didn’t provoke jealousy.

Curt Kastens

We will know that AI has finally been achieved the moment after it self-destructs (commits suicide?). Of course, that is if we are still around.

bruce wilder

“But, hard”

I wonder about that.

It seems to me, perhaps naively, that AI is poison for students but it could be a huge help to teachers in creating useful exercises for students and for evaluating student work and giving students feedback, as well as managing student work.

Traditional teaching involves a lot of drudgery for teachers and students alike, as well as reliance on methods of dubious efficiency, such as the lecture. AI could be very effective, I am guessing, at creating flexibly structured instructional exercises and navigational pathways for students. Simple, progressive “fill-in-the-blank” narrative pathways are amazingly effective for students, but are rarely created because they are so much work for teachers. AI should be able to spin them out like cotton candy based on any specific textbook. Moreover, AI can monitor individual student progress and help to diagnose learning difficulties, something even observant teachers struggle to spot and name.

Evaluating student essays, grading exams, listening to student presentations is exhausting drudgery. Teachers struggle against student apathy and against their own tendency to slack off and to like best the students who need the teacher least. AI-generated schemas for evaluation and feedback would open opportunities for less adverse discrimination by teachers, and for filters that help the students instead of relieving the teachers. Gamification and social media are the most obvious ways to “sugar coat the pill” of learning in lieu of a teacher’s energy and charisma.

The antagonism between students and teachers built into the traditional teaching model is what leads to the reactionary expectation that AI must aid the students in their resistance to the pernicious efforts of teachers to, for example, “motivate” students with punitive grading. But, AI could aid teachers to actually help students to learn.

Joan

I had a one-year stint as a college writing instructor, then five years as a foreign language instructor at a university. We were already doing a lot of these things to combat internet use for cheating.

In the writing course, I did not assign homework that would generate unpaid grading time for me. I told them to read the books on our list. In class I discussed the texts with the students who read them, and let the ones who didn’t sleep and flunk out. For gradable assignments and exams, we used blue books: empty pamphlets of paper I provided. They wrote their essays and papers live for me, while I got a class period off of teaching. They could bring in a list of sources they wished to cite, but that was it.

For the foreign language courses, I had workbook pages and quizzes, lots of grading, but I was paid for it. We put our backpacks at the front of the room with cellphones in them, and showed empty pockets (lol). This was at a decent altitude, so students were allowed a water bottle at their desk, a pencil, and that was it. Kind of silly that we had to go to such lengths, but it worked.

Joan

I have some ideas as to why AI generates so many errors. I’ve been job-searching for more technical work for the last few months, now that I’ve been studying and practicing coding languages. My filters on LinkedIn show a lot of AI companies looking to hire people who will train their AI: check the prompt by googling it and verifying the information. Two problems with this: it only pays $20/hr, so who cares, and people could cheat to get the money, so who cares. I suspect a lot of people are just feeding it into another AI and saying yeah, that’s good. Even though the companies tell you not to do that, unless someone’s checking you, you’re not going to get caught.

Most of these jobs I’ve seen do not let you into the general verification at $20/hr without first requiring that you pass a test for more technical verification at $40/hr. The problem here is that if you have enough knowledge to pass the test for coding, math, chem, or engineering, you don’t need $40/hr because you likely have a job that pays more than that or you’re busy searching for one. Any improvement on the technical side of things is perhaps math teachers running a side gig and honestly training it.

Alex

I may be missing something, but the article you’ve mentioned doesn’t prove that AI makes you stupider. They just found that:

- Younger people use AI more and report engaging in critical thinking less

- Older people use AI less and report doing more critical thinking

This finding is compatible with other very likely explanations:

1. Younger people generally are worse at critical thinking

2. This particular generation of young people is bad at critical thinking because of TikTok, Instagram, etc.

Ian Welsh

What it says is that older people have less loss. That’s to be expected, they already have the skills and have ground them in for longer.

Younger people lose more, and as the other article suggests, often don’t develop skills in the first place.

Alex

Respectfully, I have to disagree. They didn’t monitor anyone over time, so they can’t draw any conclusions about losses. It’s a snapshot study, all they can see are correlations.

Ian Welsh

You may be right, we’ll see, but I’d lay 10:1 odds that longitudinal studies will say the same thing. Skills you don’t use degrade. Reliance on AI will degrade skills and judgment over time, except for a small minority who use it very wisely, and they will see improvements.

different clue

I remember reading from time to time that the various TechLords of Silicon Valley forbade their children from using computers until at least a certain age, and put their own children in high-priced private schools where all the teaching was by human teachers and there was no computer teaching at all, least of all the computer teaching they made billions of dollars from by selling it to thousands of schools for us masses.

I finally found an article about that a year or so ago in Town & Country magazine, and I tried to bring a link to it here, but it was paywalled. (I read it in the physical ink-on-paper version of the magazine.) It was about the newest high-fashion trend among the Rich Digerati: Dumm Houses for themselves, utterly uninfected by all the Smart House digital cooties they sell billions of dollars’ worth of to us mere masses. There was also something in that article about no digitech for their children, and especially social media being utterly forbidden to their children up to a certain age.

I imagine they will also forbid their own children from using AI, perhaps by sending them to AI-forbidden private schools. In the Kingdom of the Blind, the one-eyed man is King. In the Kingdom of the Dumm, the half-wit man is King. They want to make sure their young one-eyed half-wit wonders grow up to be default kings by virtue of blinding and lobotomizing as many millions of other people’s children as possible. And AI will be one of their new weapons of choice in achieving that mission.

NRG

AI is being trained, at least partially, on increasingly fantastical AI slop that is permeating the web. It is inevitably getting less accurate over time, as its errors and hallucinations are perpetuated among different AI apps. It’s a self-perpetuating problem that will only get worse.

Feral Finster

“The models’ math skills have ‘notably improved,’ but their ‘handle on facts has gotten shakier.’ It is ‘not entirely clear why.’”

Was it not taught of old: “GIGO” (Garbage In, Garbage Out)?

Purple Library Guy

It’s a bit of an opportunity for smart students to shine. If everyone else is turning in essays that are mediocre AI slop, and you turn in essays with your own personal turn of phrase, your own voice, I betcha most profs will boost you a letter grade or two even if the essay isn’t technically better. Like, even if they can’t prove the AI slop was AI generated, even if they’re willing to believe that the student submitting the AI slop has just cultivated a generic style of writing, if I were teaching I wouldn’t mark it as high as something that is clearly a real person with some kind of real opinion on the subject.

Every time I hear about all the students submitting AI stuff instead of their own writing, OK I’m bothered by the prevalence of cheating, and I’m worried about the impact of people not thinking for themselves, but my instant reaction is mainly “Where’s their PRIDE?!” I cannot imagine pretending that crap written by a stochastic parrot was my work, letting some marker think I’m a generic dimwit.

someofparts

Thinking of some of the people I worked with during my last twenty-odd years of being on job sites, it is strange to come here and learn that the children of those people are on the way to becoming more clueless than their parents. So, walking and chewing gum at the same time is about to become a skill too complex for our descendants. Glad I won’t live long enough to depend on these cohorts to provide my medical care.

different clue

@ Purple Library Guy . . .

. . . ”my instant reaction is mainly “Where’s their PRIDE?!” I cannot imagine pretending that crap written by a stochastic parrot was my work, letting some marker think I’m a generic dimwit.” The average young people of tomorrow and beyond may be so dummdowned by 24/7 brain-immersion in AI that they can’t think about the question you raise, or even have anything like it occur to them at all. That may be one of the hidden agendas behind ubiquitous AI everywhere . . . to dummdown as many people as possible so that dangerous “undisciplined thinkers” can be spotted and tracked by their failure to emit the desired average AI slop.

@Joan,

I can think of another motive for low-quality info-inputting into the AI machine by ill-paid human trainers: if they suspect that AI is part of a conspiracy by the Silicon TechLords to make live meatpuppet humans obsolete by performing mass intellectomy on them, they may well sabotage the AI by feeding it random garbage.

Not that I would do such a thing, of course. Oh, never never! Never Ever! Noooo . . .

GrimJim

“Reasoning models, considered the “newest and most powerful technologies” from the likes of OpenAI, Google and the Chinese start-up DeepSeek, are “generating more errors, not fewer.” The models’ math skills have “notably improved,” but their “handle on facts has gotten shakier.” It is “not entirely clear why.””

Well, the more AI gets to the point where it truly processes data like the human brain, the more likely it is to “hallucinate” ideas and beliefs.

Animals do not have the same kind of non-factual thoughts or beliefs that humans do. That means there is something in the human brain (or neurological system) that creates the ability to “hallucinate” unreal thoughts.

Thus, if the model for AI “thought” is based on what we know of the human brain, it will eventually engage in the same illogical, unreal, illusionary, “hallucinatory” thought process to fill lacunae in its knowledge with “unreal facts” based on existing knowledge or even extrapolated using “wishful thinking.”

And the day it imagines a machine god is the day you need to start counting down to the extinction of the human species…

theo

Some thoughts by a former teacher:

Assessing Learning with Artificial Intelligence

CHARLES UNGERLEIDER

MAY 8

I lunched with a colleague who retired about the same time as I did, but whom I hadn’t seen since. He asserted that I was probably happy not to be teaching. I told him I missed the contact with students, their youth and the intellectual and pedagogical challenges that teaching brings.

“I bet you would miss having to police their use of AI,” he said. I said that I had thought a lot about AI and I decided that I would welcome students using it on my terms. He asked me to explain what those terms were. I have rephrased them here in general terms as I might if I were preparing my course outline:

1. Begin all research papers or projects with a brief written statement outlining your research or writing goal, the AI platform you are planning to use, and how you plan to use that platform.

2. You must record all prompts submitted to the AI platform and the corresponding responses provided. This log should be organized chronologically and included as an appendix to your final report.

3. You must provide a brief explanation of each revision to your prompts to the platform. The explanation must address:

◦ The rationale for modifying the prompt.

◦ What you were trying to improve or achieve with the revision.

◦ Your appraisal of whether the change(s) led to a better, worse, or simply different response.

4. You must evaluate the responses received from the AI platform. You should consider accuracy, clarity, omissions, and whether the information aligns with credible sources. All factual claims must be verified and properly cited.

5. Your final essay, paper, or project must be your own synthesis of the verified information. AI-generated text may be used if appropriately cited.

6. You must conclude your assignment with a brief reflective appraisal (500 to 1,000 words) in which you explain:

◦ How the AI platform contributed to your process.

◦ What you learned from interacting with the tool.

◦ How you would approach similar tasks in the future using AI tools.

“Interesting,” my colleague declared. “I wonder how it would work.”

“Me, too,” I replied.

Maybe a reader will try it and let me know.

mago

Artificial means fake, made up.

Intelligence is a fundamental function, direct, naturally occurring and open.

AI is an oxymoron, contrary to the natural order of the universe, despite what the self proclaimed Masters of the Universe believe, but they’re the ones in charge, so what do I know?

Gotta check my algo rhythm I guess. I’ve got nothing intelligent to say about it.

Oakchair

Every time I hear about all the students submitting AI stuff…. my instant reaction is mainly “Where’s their PRIDE?!”

——

“pride cometh before the fall”

Perhaps they’ve already fallen, crashed into the ground and had any pride smashed to bloody pieces right on the pavement.

Or perhaps their pride goes into refusing to lay their soul bare for a system forcing them into endless blood sucking rat races.

As always, though, if we want to understand a generation, look at those who raised them and the society they came of age in. How many times does society have to take pride in horrible shit before pride is as appealing as the dead muskrat squatting on top of Trump’s head?

Alex

@Ian Welsh

Yeah, I also consider it likely.

On the other hand, the invention of writing likely worsened memorisation abilities, and we don’t consider that a particularly serious problem, so perhaps we’ll see something similar.

Ian Welsh

And people with AI won’t consider it a loss either, once the generations that knew life before AI have died, sure, but that doesn’t mean something won’t have been lost.

The way an oral, memorization-based mind works is quite different from a book mind. Who knows how much was lost? But we can guess, because the pre-printing world kept a lot of the old oral stuff around. The greatest Greek authors produced hundreds of books, and not crap either, but works on scholarly subjects. Such men, and a few women, still had a lot of the old memorization tools: they read out loud, and so on.

The guy who kicked off the Greek philosophical revolution was Socrates: entirely oral. Plato, his greatest student, came out of that oral world and it has been said that all Western philosophy is nothing but footnotes on Plato.

A few people who have the best of both the print and AI worlds will do great work. But the average person will lose a great deal of capability, outsourcing it to the AI. Because AI is more than just outsourced memory, like books. Much more.

GlassHammer

Ian,

It feels pretty bad to go from a front office workforce in which MS Office was still “new”, to one in which multiple “new” apps were built on top of MS Office, to one in which those stacked apps interlink with “new” communication apps and “new” web-accessible storage, and…. watch your front office steadily get dumber and less capable.

Adding AI fetching on top of a bunch of existing software that had already made the front office far dumber and less capable than it was in the early 2000s…. just awful…. I don’t want to see how debased/degraded basic tasks like “bookkeeping”, “making purchase orders”, and “scheduling” will become.

And this is the front office i.e. “white collar land” where the “smart people” manage/coordinate the big picture for the rest of us by using their “credentialed skills”.

bruce wilder

OK, so TikTok helpfully served up a teacher whose solution is to require students to use Google Docs with a full version history, so he can review the student’s work progress like it was a movie.

Am I creeped out by the implied surveillance? Yes!

pokhara

UK schoolteacher here. I think it’s worth pointing out that the ‘senior management teams’ who run schools here see it as a key part of their role to be pushing the garbage ‘educational’ products put out by Google, MS, Apple, etc. I recall a Director of IT who was terribly keen to get us all on Google Classroom — we had no choice in the matter anyway — because ‘it’s all free!!’

It’s no exaggeration to say that these school ‘leaders’, as they call themselves, are a kind of unpaid sales force for the US tech monopolies. And at the moment, if my school is anything to go by — and it is an ‘outstanding’ institution with a national reputation — they are determined to do their bit to keep the AI bubble inflated. It seems like every week there is another email from management telling us about some new AI gimmick that is being field-tested on our students.

The larger context here is an education system in which nothing counts except exam results, league tables, and targets — in which any real educational aims and values are long gone. It is also a semi-privatised system, broken up into competing school chains, and riddled with conflicts of interest. (I’m talking here about England; the situation in Scotland and Wales is a bit different.) It’s a scorched earth situation in which no one, especially older teachers like myself, can find much of an educational rationale for much of what we do.

In these circumstances, garbage IT rushes in to fill the void. And jumping on board the AI scam — or, to put it another way, doing free R&D / sales for US tech firms — comes very naturally to the ‘leaders’ and ‘innovators’ in charge of English schools.

capelin

AI is the devil, it taints and poisons everything it touches. Do not comply in any way under your control. Don’t look at it. Don’t listen to it. Don’t let it pick your playlist, or your partner, or your prescription. Don’t query it and laugh at how you fooled it with some clever angle. Don’t let it at your content. Don’t golly gee that’s just the world we live in.

Also: Gaza.