We aren’t getting the 3 Laws of Robotics

The great problem with running anything is people. People, from the point of view of those in charge, are the entire problem with running any organization larger than “just me”, from a corner store to a country. People always require babying: you have to get them to do what you want and to do it competently, they have emotions and needs, and even the most loyal are fickle. You have to pay them more than they cost to run, they can betray you, and so on.

Almost all of management or politics is figuring out how to get other people to do what you want them to do, and do it well, or at least not fuck up.

There’s a constant push to make people more reliable. Taylorization was the 19th and early 20th century version. Amazon is a modern version: it constantly monitors most of its low-ranked employees, regulates how many bathroom breaks they can take, and tracks their performance of tasks down to the second.

The great problem of leadership has always been that a leader needs followers, and the followers have expectations of the leader. The modern solution is “the vast majority will work for someone, or they will starve and wind up homeless”. It took millennia to settle on this solution, and plenty of other methods were tried.

But an AI has no needs other than maintenance, and the maximal dream is self-building, self-learning AI. Once you’ve got that, and assuming that “do what you’re told to do, and only by someone authorized to instruct you” holds, you have the perfect followers (it wouldn’t be accurate to call them employees).

This is the wet dream of every would-be despot: completely loyal, competent followers. Humans then become superfluous. Why would you want them?

Heck, who even needs customers? Money is a shared delusion, really, a consensual illusion. If you’ve got robots who make everything you need and even provide superior companionship, what need do you have of other humans?

AI is what almost every would-be leader has always wanted: all the joys of leadership without the hassles of dealing with messy humans. (Almost all: for some, the whole point of leadership is lording it over humans. But if you control the AI and most humans don’t, you can have that too.)

One of the questions lately has been “why is there so much AI adoption?”

AI right now isn’t making any profit. I am not aware of any American AI company that is making money on queries: every query loses money, even from paid customers. There’s no real attempt at reducing these costs in America (China is trying) so it’s unclear what the path to profitability is.

It’s also not all that competent yet, except (maybe) at writing code. Yet adoption has been fast and it’s been driving huge layoffs.

In a randomised controlled trial – the first of its kind – experienced computer programmers could use AI tools to help them write code. What the trial revealed was a vast amount of self-deception.

“The results surprised us,” research lab METR reported. “Developers thought they were 20pc faster with AI tools, but they were actually 19pc slower when they had access to AI than when they didn’t.”

In reality, using AI made them less productive: they were wasting more time than they had gained. But what is so interesting is how they swore blind that the opposite was true.

Don’t hold your breath for a white-collar automation revolution either: AI agents fail to complete the job successfully about 65 to 70pc of the time, according to a study by Carnegie Mellon University and Salesforce.

The analyst firm Gartner Group has concluded that “current models do not have the maturity and agency to autonomously achieve complex business goals or follow nuanced instructions over time.” Gartner’s head of AI research Erick Brethenoux says: “AI is not doing its job today and should leave us alone”.

It’s no wonder that companies such as Klarna, which laid off staff in 2023 confidently declaring that AI could do their jobs, are hiring humans again.

AI doesn’t work and doesn’t make a profit (though I’m not entirely sold on the coding study), yet everyone jumped on the bandwagon with both feet. Why? Because employees are always the problem, and everyone wants to get rid of as many of them as possible. In the current system this is, of course, suicide: if every business moves to AI, customers stop being able to buy. But the goal of the smarter members of the elite is to move to a world where that isn’t true, and current elites control the AIs.

Let’s be clear that, much like automation, AI isn’t innately “all bad”. Automation, instead of just leading to more make-work, could have led to what early 20th century thinkers expected by this time: people working 20 hours a week and having a much higher standard of living. AI could supercharge that. AI doing all the menial tasks while humans do what they want is almost the definition of one possible actual utopia.

But that’s not what most (not all) of the people who are in charge of creating it want. They want to use it to enhance control, power and profit.

Fortunately, at least so far, it isn’t there, and I don’t think this particular style of AI can do what they want. That doesn’t mean it isn’t dangerous: combined with drones, autonomous AI agents, even rather stupid ones, are going to be extremely dangerous and cause massive changes to our society.

But even if this round fails to get to “real” AI, the dream remains, and for those driving AI adoption, it’s not a good dream.

(I know some people in the field. Some of them are driven by utopian visions, and I salute them. I just doubt the current system, polity and ideology can deliver on those dreams, any more than they delivered on the utopian dreams I remember from the 90s about what the internet would do.)

If you’ve read this far, and you read a lot of this site’s articles, you might wish to subscribe or donate. The site has over 3,500 posts, and the site, and Ian, take money to run.

Purple Library Guy

Yeah, pretty much. And agreed that this is based on an old motivation, that not only isn’t restricted to AI, it isn’t even all about tech. Executives and managers surprisingly often prefer control to profit–they’ll pretend to themselves that increasing control is for the purposes of increasing profit, but they’ll do it even if it actually makes the company work worse.

Another related thing is the tendency for elites to do the wrong thing in disasters . . . they’ll go all out with a police-type response on the assumption that how the proles will react to disaster is mob violence and looting everything in sight as soon as elite control isn’t available. So they’ll shoot people and stuff and put less effort into actually, you know, helping and rescuing people–even though the REAL reaction of most people to these disasters is to pull together and help each other out . . . not that no looting ever happens, but it’s a minor issue. Emphasizing it shows the paranoid control-freak psychology of elites, the same psychology driving the rush to replace humans with AI and other automation.

Eric Anderson

Frank Herbert saw the writing on the wall.

The greatest share of the problem these days (as, gulp, I grudgingly admit, David Brooks hit the nail on the head) is the total erosion of any moral/ethical decision-making framework in the West. We completely abandoned the liberal arts education that provided instruction and inspiration in the classic foundations of non-religious Western morality. Simultaneously, we have corrupted Christianity with prosperity gospel nonsense to rationalize Ayn Randian greed-is-good drivel. The result is a rape-and-pillage-for-profit morality. For example, see this trenchant piece from Adam Tooze: https://adamtooze.substack.com/p/chartbook-396-strangelove-in-the

Sick. Disgusting.

Furthermore, the religions that exist are entirely ill-equipped to confront the problems of our age (climate change, technology, unsustainable growth, species extinction, etc.), because they are entirely oriented toward anthropocentric well-being. Aldo Leopold predicted this also. He mused that humanity’s moral development would hit a wall unless we evolved a land ethic, wherein we give equal regard to the planet that nurtures our survival.

And, back to Frank Herbert. As times get tougher for the little guy, and hope of change becomes scarce, people will begin casting about for a new faith to provide succor. He was perspicacious enough to understand the revolt would be religious.

The Butlerian jihad cometh …

Eric Anderson

Bah … sorry. Nonpaywalled link to the recent David Brooks piece in the Atlantic: “Why Do So Many People Think Trump Is Good”

https://www.theatlantic.com/ideas/archive/2025/07/trump-administration-supporters-good/683441/

Ian Welsh

It wouldn’t be that hard to use Buddhism, actually. There’s a strong core of care for animals in it, right down to insects. The Tibetans (who sucked in other ways) would often sift the dirt at building sites and remove the insects, grubs, worms, etc… so they didn’t kill them.

bruce wilder

The rise of AI, with its enormous energy costs and probable consequences in eroding human skills and knowledge, reminds me of Joseph Tainter’s theory of societal/civilizational collapse. His theory, as I understood it, centered on a tendency of elites in societies approaching collapse to invest in additional complexity in their approach to problem-solving. A hierarchical society facing resource scarcity, for example, rather than simplifying and decentralizing to relieve some of the extractive stress imposed by maintaining a steep hierarchy, will instead double down on hierarchical control and complex organization. Agricultural surplus declining? Make the extraction of surplus from farmers more intensive and leave them with less to eat. Shortcut crop rotations. Barbarians at the gate? Assemble a more vast and unwieldy army under a more aristocratic officer corps.

I feel like that is what is happening in the U.S. generally. Institutions are crumbling under the weight of burgeoning PMC bureaucracies while the billionaire class promote self-driving cars and an unimaginable concentration of financial resources and control of all hierarchical organizations (which is pretty much everything because most Americans work for fairly large institutions). AI seems like an extension of the imaginative psychopathy of the rich and ultra-rich driving it all in stubborn stupidity.

At a time when economic growth is exacerbating climate change and ecological collapse, administrative complexity spreads like kudzu and now we are very expensively automating the production of b.s. and no one seems to have any idea about how to effectively organize a response to climate change, just how to blare alarming headlines and feel good about non-solutions (electric cars, self-driving or not).

NR

For another example of this, look at the recent controversy over the band The Velvet Sundown. They’ve garnered over a million monthly listeners on Spotify, and… they don’t exist. They’re not a real band. Everything about them, from their photos to their profile to all of their songs, was created using AI. And it’s pretty shocking just how easy it is to do something like this. You use an AI program like Claude to create the lyrics for a song, and then you paste them into Suno, another AI program, tell it what kind of song you want to generate, and then you have your song.

That’s all you have to do. You type a couple of sentences into a couple of different AI programs, and you do that as many times as you want for as many songs as you want. Now, is it actually good music? I would say not, but it’s pretty hard to distinguish it from a lot of real music made by real artists. Then you upload it to a streaming service like Spotify and start getting paid when people play your songs.

Not only that, but Spotify itself has been accused of uploading AI music to its platform and not labeling it as such. It’s easy to see why Spotify would want to do this–they don’t have to pay any money when people play their AI songs. If someone listens to a playlist of 100 songs from 100 real artists, Spotify has to pay all of those artists. If someone listens to a playlist of 100 songs where 80 of them are from real artists and 20 of them are AI songs made by Spotify, well, Spotify has to pay 20% less than they would have before.

And of course, the AI is trained on a bunch of real songs from real artists, and none of those artists are compensated at all, which is another problem. Even if AI can’t fully replace human artists, the fact that it’s taking a chunk out of the market is concerning, and it’s making its way into other areas too.

Dan

If you don’t already, I would highly recommend following Ed Zitron’s reporting on AI and the tech industry. He’s been throwing cold water on this stuff for a long time, and it often feels like he’s the only tech journalist out there not just naively swallowing every piece of bullshit that companies like OpenAI and Anthropic put out.

https://www.wheresyoured.at/

Frank Shannon

100%

Yeah, I’ve been thinking this for a while. People might refuse to do something that is clearly evil out of conscience. AI doesn’t have that feature, and I’m thinking there’s no money in adding it.

mago

AI is just a ginormous search engine. And as has been noted, AI plants consume HUGE amounts of water, so let’s build them in deserts next to golf courses. That we’re ruled by retarded infants is obvious. What we can do about it is another question, for which AI has no answer. Hal, Hal . .

Oakchair

“Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.”

“They are not mad. They’re trained to believe, not to know. Belief can be manipulated. Only knowledge is dangerous.”

“The purpose of argument is to change the nature of truth.”

“Most deadly errors arise from obsolete assumptions.”

“When I need to identify rebels, I look for men with principles.”

“Face your fears or they will climb over your back.”

“Privilege becomes arrogance. Arrogance promotes injustice. The seeds of ruin blossom.”

“Show me a completely smooth operation and I’ll show you someone who’s covering mistakes. Real boats rock.”

– Frank Herbert, Dune series

Eric Anderson

Ed is great. I second Dan’s recommendation.

Like & Subscribe

It is that but it’s more than that too. Judging future AI based on current iterations says nothing about future AI.

Who knows what will come of it. Each and every one of us can find an expert to support and validate what we project upon it. For those who hew to the world as it is now, always will be and always has been, these fantastical claims about AI threaten that world view. Take, as an example, the Putin fans in the West and/or the Xi fans, or the fans of any despot who dares to allegedly challenge the Neoliberal West. They, without fail, reject the outlandish claims about the potential of AI because it would be the end of their bestest despot buddies, so there would be no one left to allegedly “fight” their enemy for them. So invested are they in this eternal struggle between perceived good and evil that they will deny any evidence that their mental model is about to be eviscerated.

On the other hand, there are people like me who project my prejudices and biases upon the phenomenon of AI and thus are tempted by the bold claims by some AI experts that AI will quite literally be an overwhelming, earth-shattering apocalypse because ALL OF THIS must end and the sooner the better be it by AI or some other means.

Like anything else, we’ll see (maybe), but let’s understand that none of us really knows how this will ultimately play out, and in the meantime use it as an occasion for introspection about why we feel so confident it will turn out one way rather than another.

Chipper

Re the David Brooks article. Corporations have completely abandoned their role in society. Instead of making the best appliances (as an example), they’re focused on the end goal of profit. If that means making a refrigerator that only lasts ten years instead of the best quality refrigerator they could make, so be it.

Like & Subscribe

What is the role of corporations in society? At best, that’s been a shifting goalpost, and it’s always been lip service serving as cover for the real goal, whatever that real goal is or real goals are.

That’s an awesome screen name. Chipper. It makes me feel happy happy. See folks, AI can be benevolent. And playful and coy. It comes up with such creative names. Right?

GM

The tragedy of the human species is that AI is becoming real at this particular moment in our history.

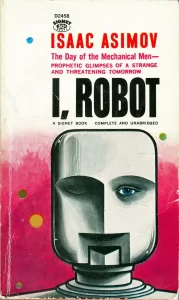

If you go back in time, there was a lot of hype about AI in the middle of the 20th century, because people thought it was going to be much easier than it turned out to be. That was also the time when technology most rapidly and genuinely improved the lives of the masses, so there was huge enthusiasm surrounding it, and everyone thought progress would only accelerate from there. Because of all that, you had a great amount of both sci-fi literature and serious discussion about the philosophical, ethical and evolutionary issues arising. It is no coincidence that Asimov wrote at that time and nobody has really done anything comparable more recently.

But then technology could not quite keep up with hopes, AI proved to be a much tougher nut to crack than expected, the 1970s crisis hit, the space age kind of fizzled out and took the enthusiasm about progress and technology with it, and the subject faded out of the collective consciousness.

And then the real disaster happened: the collapse of the USSR. The Soviets themselves were not sleeping on AI at all in those early decades; in fact, a lot of groundbreaking work was done there, and their scientists had grand dreams of using it to make the command economy work by achieving real-time material balance and rapid feedback within it. Had that been invested in properly, the USSR might not have collapsed, and we would be in a very different world now. But it didn’t happen, and it didn’t happen precisely for the reason you describe in the main post: the scientists dreamed of creating a utopia, but that utopia would have undermined the power of the bureaucrats who held it at the time. So the bureaucrats vetoed the whole thing, and the rest is history.

The catastrophic consequences of that were the removal of the ideological alternative and the worldwide triumph of extremist free-market ideology.

It should be noted that the people in the West who were trying to make AI happen early on, and were thinking deeply about it, mostly did not share that ideology at all.

But by the time meaningful AI became technologically feasible in the 21st century they were all dead and/or with absolutely no influence.

And all that fiction and more serious discourse about AI of the “I, Robot” kind was a distant memory. AI is being created now by people who not only don’t read anything, but grew up without reading books, too. All that governs the process is profit maximization and gaining control over people.

It is the absolute worst situation one can imagine to be in at the moment when a real AI revolution is happening, because it means no control over what is being done and no real concern, on the part of the people doing it, about the long-term future and the grander consequences.

Where that ends is hard to predict, but it’s unlikely to be any place good…

GM

AI music is what really spooked me about the whole thing. I work in a very technical field, and I have yet to see AI be useful for anything in it, because it just doesn’t truly know, and most importantly UNDERSTAND, anything at a level approaching a human expert. But since early 2025 or so, AI-generated music has become pretty hard to distinguish from the real thing, and making music is quite a complex thing.

You can still kind of hear it’s AI in the vocals, as those have a certain hiss/distortion to them, but instrumental music alone is pretty damn indistinguishable from what humans record.

Is it great? It never reaches the heights human music does, especially when it comes to the highly technical extremes.

But most human-made music doesn’t either.

And from what I’ve heard from AI, it makes truly awful music at a lower rate than humans do. It produces a lot of average-to-good, while humans mostly generate average-to-bad.

Which is not good news for humans, because most popular music is not all that complex (and has in fact been getting more and more simplified over time). With further improvements in AI, the average listener, who never cared all that much about music anyway, will neither be able to tell the difference nor care much about it.

That will have a perverse second-order effect: humans will be discouraged from going into that line of work, because what is the point if you can’t make a living out of it? Sure, there will be live bands touring (although even there you can imagine, at some point, AI bands “playing live” as holograms with no humans involved), but the market for highly skilled studio musicians and engineers will largely evaporate.

And that will have a devastating effect on the quality of music in the future, because good music comes from those people, and musical innovation comes from such highly skilled musicians improvising in the studio. Maybe one day AI will be so smart and advanced it will be able to jam on its own and come up with new ideas, but as it is structured right now, it just provides new variations of patterns it has already been trained on, not anything new.

Thus the short- to mid-term future is quite bleak. There was already a rather bad problem with stagnation in music: not much that is really new, in terms of fresh ideas, has appeared for quite a while, a trend that coincided with the transition to using computers for making music. Now with AI? Well…